Supporters, opponents argue racial preconceptions skew pretrial assessments

By: Michaela Paukner // September 21, 2020

As calls for racial justice intensify throughout the country, so have efforts to reform aspects of the criminal justice system, including pretrial-detention practices that keep hundreds of thousands of legally innocent people behind bars.

The Prison Policy Initiative reported that pretrial detention is responsible for nearly all of the net growth in the jail population over the past 20 years. Seventy-four percent of the U.S. jail population, or 550,000 people in total, hasn't been convicted or sentenced.

When deciding who should be detained and who should be released, many courts rely on pretrial-assessment systems. These are meant to offer an unbiased, objective means of estimating the chances that a defendant will fail to appear for future court dates, commit new crimes or commit new violent crimes after release.

Some argue the systems are actually making racial disparities worse. One such group is the American Bail Coalition, an association of national bail insurance companies and bail agents. Jeff Clayton, the coalition's executive director, said pretrial-assessment systems have racial bias built into them.

“Nobody bothered, when they built these things, to test for racial bias or think about racial bias, and now that’s come to the forefront,” Clayton said.

One of the most widely used systems is the Public Safety Assessment, which was developed by the Laura and John Arnold Foundation. In Wisconsin, the PSA is used in Milwaukee, Dane, Outagamie and La Crosse counties.

Advancing Pretrial Policy and Research, an initiative paid for by the PSA’s developers, provides guidance to organizations on the proper use of the system. Alison Shames, co-director of APPR, said the PSA is objective.

“In terms of our validation studies that have been done to date, these show that the PSA predicts equally well for people across race and ethnicity,” Shames said.

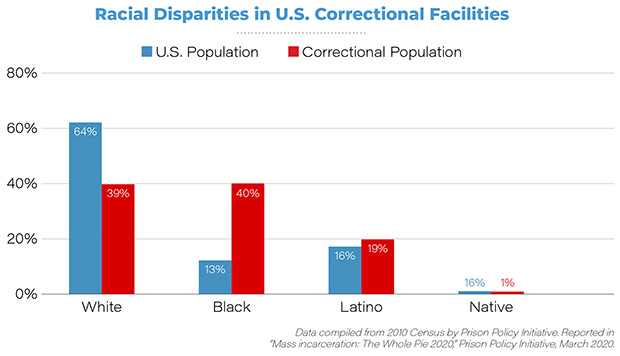

Trouble arises, though, when bias stems from the data that are coming into the system. This can happen, for instance, when the police arrest people of color at disproportionate rates, Shames said.

“The challenge is that bias is endemic to the criminal justice system and to the data itself,” Shames said. “The data that comes into this tool has the bias of whatever the community might have.”

What the PSA does

The PSA uses a defendant’s criminal history to come up with a score that judges and court commissioners can use to make decisions. In doing so, it considers nine factors (see infographic).

Each factor is weighted and assigned points. Those points are then converted to scales of 1 to 6 for the “Failure to Appear” and “New Criminal Activity” categories. A defendant can also be flagged for risk of new violent criminal activity.

The criteria don’t change. But when a court makes use of the PSA, it also develops a custom chart to help judges and court commissioners interpret what bearing each score might have on a defendant’s prospects for release.

The chart, known as the Release Conditions Matrix, varies from place to place depending on what resources can be found in each. For example, some jurisdictions may carry out criminal history checks once a month and require electronic monitoring or drug testing for certain scores.

APPR strongly recommends jurisdictions work with local policymakers and residents on the matrix. Shames said the organization also recommends every jurisdiction complete its own validation study to find out how accurate the PSA is at the local level.

APPR publishes the results of validation studies on its website. The results usually vary from one place to the next, as does the content of the reports. Some counties have assessed the PSA through the lens of race, whereas others have considered their populations as a whole.

Of the five studies on the website specifically analyzing the PSA, three considered the effect defendants’ race might have had on outcomes. The studies found no statistical difference in the rates of pretrial release among the various groups.

PSA in practice

Clark Rodgers is one of two people in Dane County who put data into the PSA to obtain defendants’ scores.

He has decades of experience working with pretrial assessments, first with U.S. Probation and Pretrial Services for nearly 23 years. He was then hired as a pretrial assessor in Dane County in 2017, when the county adopted the PSA.

Rodgers said the county runs the assessment on felony cases resulting from new arrests, new arrests with holds and new arrests with probation holds, although there are some exceptions.

An arrest counts as new when the person has been in custody for less than three days. Rodgers said 29 of the people arrested on the weekend of Aug. 29-30 qualified for PSAs.

An assessment typically takes 15 minutes to an hour to complete. Rodgers and Maggie Thomas, the other pretrial assessor for Dane County, use data from the Spillman jail management program, CCAP and other records databases to complete their assessments. They also obtain out-of-state records to learn whether points should be assigned.

“We try to maintain really close fidelity to what the application says,” Rodgers said. “The evidence-based practices stuff relies on the fact that a program is only as good as your application to the program.”

Maintaining fidelity means Rodgers sometimes has to investigate which records the PSA is using to assign a score. Bad results can occur if the system receives duplicate information for the same defendant or pulls up warrants issued in error. At such times, Rodgers turns to a reference guide listing various risk factors and outcomes as defined by the PSA researchers and Dane County Circuit Court. If questions still remain, his practice is to err in the defendant's favor.

“If you have history that you can’t verify, then it goes to the benefit of the defendant to not score it because it gives them a lower score,” Rodgers said. “We’re not going to hold them to something that we can’t legitimately verify.”

In 2017, Dane County worked with Harvard University’s Access to Justice Lab and the Laura and John Arnold Foundation to study the PSA’s outcomes locally. Researchers finished the study in 2019 and are now compiling and analyzing the results.

Rodgers said he doesn’t track the outcomes of the PSA’s assessment — that’s done by the county’s equity and Criminal Justice Council coordinator — and he sees questions about racial disparities in outcomes as policy issues.

“Maggie and I try to keep our personal opinions out of it because we’re trying to be objective, and we take that really seriously,” Rodgers said. “We’re trying to make sure any bias we may have doesn’t affect the decisions we make.”

Predicting Pretrial Outcomes

Factors Considered by Public Safety Assessment Tool

1. Age at time of current arrest

2. Current violent offense

2a. Current violent offense and whether the person is 20 or younger

3. Pending charge at the time of the arrest

4. Previous misdemeanor conviction

5. Previous felony conviction

5a. Previous conviction (misdemeanor or felony)

6. Previous violent-offense conviction

7. Previous failure to appear in the past 2 years

8. Previous failure to appear that’s older than 2 years

9. Previous sentence to incarceration

Each factor is weighted and assigned different points according to the strength of its relationship with three pretrial outcomes: failure to appear (FTA), new criminal activity (NCA) and new violent criminal activity (NVCA).

FTA and NCA points are converted to scales ranging from 1 to 6. Lower scores suggest a greater likelihood of pretrial success. NVCA points determine whether a defendant receives a “violence flag.”

Information from Advancing Pretrial Policy & Research website
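For readers who want to see the mechanics, the Python sketch below mimics the general structure described above: weighted factors add up to raw points, the points are mapped onto a 1-to-6 scale for failure to appear and new criminal activity, and a separate point total decides whether a violence flag is raised. Every weight, cutoff and threshold in it is a hypothetical placeholder chosen for illustration, not a value published by the PSA's developers, and the Defendant fields are a simplified stand-in for the factors listed above.

```python
from __future__ import annotations

from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Defendant:
    """Simplified inputs loosely based on the factors listed above."""
    age_at_arrest: int
    current_violent: bool
    pending_charge: bool
    prior_misdemeanor: bool
    prior_felony: bool
    prior_violent_convictions: int
    recent_ftas: int          # failures to appear in the past 2 years
    older_fta: bool           # any failure to appear older than 2 years
    prior_incarceration: bool


# HYPOTHETICAL cutoffs: each cutoff the raw points reach moves the scaled
# score up one step, so five cutoffs yield a score from 1 to 6.
FTA_CUTOFFS = [1, 3, 4, 5, 6]
NCA_CUTOFFS = [1, 3, 5, 7, 9]
VIOLENCE_FLAG_THRESHOLD = 4   # HYPOTHETICAL


def to_scale(points: int, cutoffs: list[int]) -> int:
    """Map raw points onto a 1-to-6 scale (lower means lower predicted risk)."""
    return 1 + bisect_right(cutoffs, points)


def fta_points(d: Defendant) -> int:
    # HYPOTHETICAL weights for "Failure to Appear"
    return (
        (1 if d.pending_charge else 0)
        + (1 if d.prior_misdemeanor or d.prior_felony else 0)
        + 2 * min(d.recent_ftas, 2)
        + (1 if d.older_fta else 0)
    )


def nca_points(d: Defendant) -> int:
    # HYPOTHETICAL weights for "New Criminal Activity"
    return (
        (2 if d.age_at_arrest <= 22 else 0)
        + (3 if d.pending_charge else 0)
        + (1 if d.prior_misdemeanor else 0)
        + (1 if d.prior_felony else 0)
        + min(d.prior_violent_convictions, 2)
        + min(d.recent_ftas, 2)
        + (2 if d.prior_incarceration else 0)
    )


def nvca_points(d: Defendant) -> int:
    # HYPOTHETICAL weights for "New Violent Criminal Activity"
    return (
        (2 if d.current_violent else 0)
        + (1 if d.current_violent and d.age_at_arrest <= 20 else 0)
        + (1 if d.pending_charge else 0)
        + (1 if d.prior_misdemeanor or d.prior_felony else 0)
        + min(d.prior_violent_convictions, 2)
    )


def assess(d: Defendant) -> dict:
    """Return scaled FTA and NCA scores plus a yes/no violence flag."""
    return {
        "fta_scale": to_scale(fta_points(d), FTA_CUTOFFS),
        "nca_scale": to_scale(nca_points(d), NCA_CUTOFFS),
        "violence_flag": nvca_points(d) >= VIOLENCE_FLAG_THRESHOLD,
    }


# A 35-year-old with a single prior misdemeanor and nothing else on record.
example = Defendant(35, False, False, True, False, 0, 0, False, False)
print(assess(example))  # {'fta_scale': 2, 'nca_scale': 2, 'violence_flag': False}
```

Keeping the weights and cutoffs in named constants mirrors the article's point that the criteria stay fixed while each jurisdiction decides separately, through its Release Conditions Matrix, what a given score means for release.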

‘Fatally flawed’ tool

Clayton and the American Bail Coalition believe Dane County's pretrial assessors are set up for failure before they even start putting information into the PSA. The trouble, Clayton said, lies in the algorithms that risk-assessment systems use to generate scores, which he said are inherently biased.

Clayton cited studies of the Colorado Pretrial Risk Assessment Tool and COMPAS Risk & Need Assessment Systems, both of which have been used to predict outcomes for defendants of various races. According to Clayton, when the systems were wrong, they over-predicted risk for Black defendants and under-predicted risk for white defendants.

The creators of the PSA haven’t subjected the tool to such an analysis, Clayton said. Rather, they argue the tool’s accuracy is the same among racial groups.

Clayton said the PSA's creators are OK with a tool that gets it wrong, as long as it gets it wrong at the same rate for each group of defendants.

“Because the science breaks down, there’s no scientific way for judges to then correct it,” Clayton said. “That’s why the process is fatally flawed.”
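The disagreement turns partly on which error measure is compared across groups. The Python sketch below uses invented records, not data from any study or from any tool named in this article, to show how a tool can be equally accurate for two groups while its mistakes run in opposite directions: one group's errors are all false positives (labeled high risk but did not reoffend) and the other's are all false negatives (labeled low risk but did reoffend).

```python
from collections import Counter


def make_group(name, tp, tn, fp, fn):
    """Build INVENTED records as (group, predicted_high_risk, reoffended)."""
    return (
        [(name, True, True)] * tp
        + [(name, False, False)] * tn
        + [(name, True, False)] * fp
        + [(name, False, True)] * fn
    )


# INVENTED toy data: both groups get 7 of 10 predictions right, but group A's
# errors are all false positives and group B's are all false negatives.
records = make_group("A", tp=3, tn=4, fp=3, fn=0) + make_group("B", tp=3, tn=4, fp=0, fn=3)


def error_rates(records, group):
    """Compute accuracy and the two directional error rates for one group."""
    counts = Counter((pred, actual) for g, pred, actual in records if g == group)
    tp = counts[(True, True)]
    tn = counts[(False, False)]
    fp = counts[(True, False)]
    fn = counts[(False, True)]
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        # Share of people who did NOT reoffend but were labeled high risk.
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        # Share of people who DID reoffend but were labeled low risk.
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
    }


for group in ("A", "B"):
    rates = error_rates(records, group)
    print(group, {name: round(value, 2) for name, value in rates.items()})
```

With these made-up numbers both groups show 70% accuracy, yet only members of group A are ever wrongly labeled high risk, so the same tool can look fair by one measure and unfair by the other.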

Rather than rely on pretrial-risk assessments, Clayton said judges should be using their own discretion.

“There’s been no evidence that a tool does better than judicial discretion in terms of racial bias or any other measure that you want to use,” Clayton said. “We’ve spent millions of dollars to evaluate millions of defendants, and we’re wasting that money that could be spent on diversion, drug rehabilitation, etc.”

A method for consistent decisions

But such a reliance on judicial discretion alone is exactly what the PSA is meant to avoid.

“Without (the PSA), you have a judge making a decision based on their own biases and their own subjective experience,” Shames said. “They’re looking at the same data that goes into the tool, but they’re making their own decision about it, or they’re basing something on a charge and attaching a financial condition to it that may keep someone in jail.”

Shames said the PSA shows judges that most people won’t get arrested while on release and can also be counted on to show up for their court dates — no matter their race. Even so, she said, the system is only as good as its data.

“The PSA doesn’t produce the bias,” Shames said. “It is just using data that has some bias baked into it, but it is at least giving judges information to help them make more consistent and more transparent decisions.”

Shames said APPR does make it a point to talk to users about ways that bias can be introduced by whatever data are coming into the PSA.

“No tool can eliminate the racial bias that exists in a system,” Shames said. “As jurisdictions confront that and their arresting and sentencing practices are reformed, then the data will be better going into the system, and the tool will better and accurately predict.”

APPR views the PSA as one way to help improve pretrial procedures.

“What this is really about is releasing people, not detaining people,” Shames said. “What we know from the data we have from PSA results and other data across the pretrial field is people on pretrial release succeed the vast majority of the time.”
